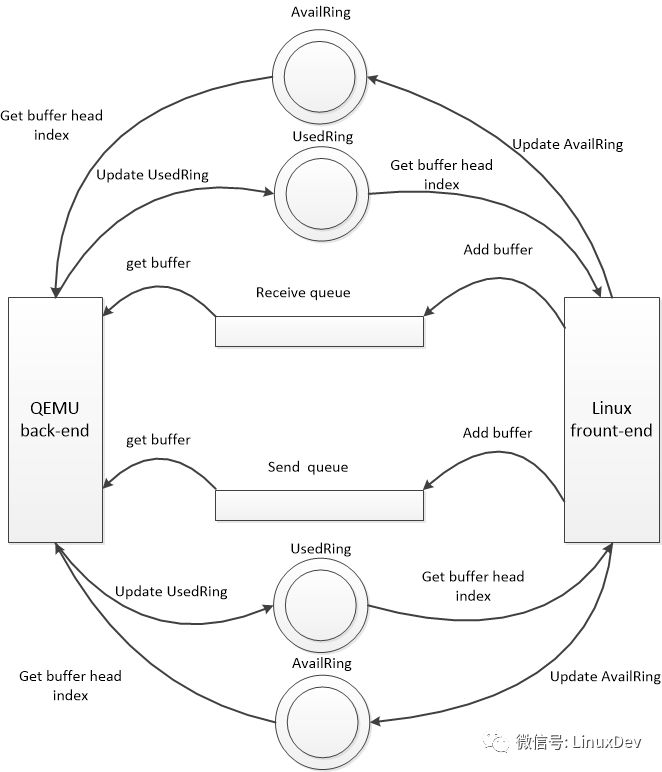

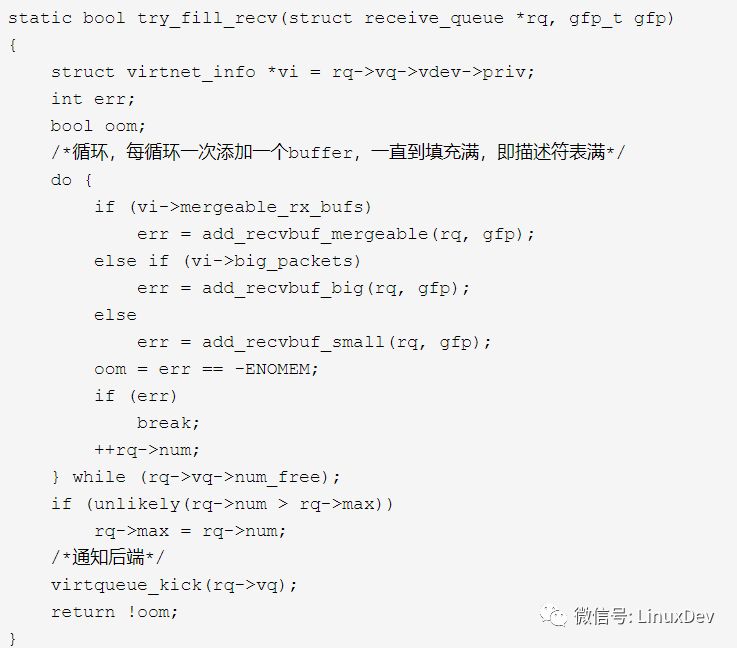

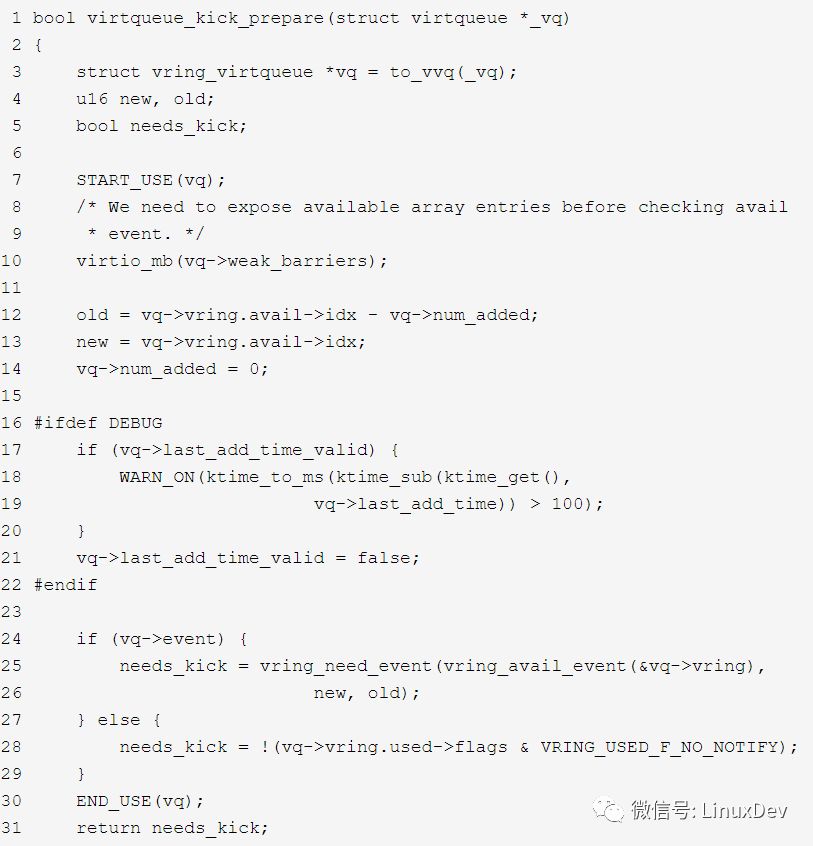

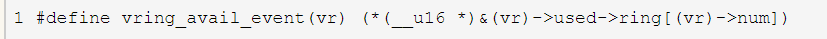

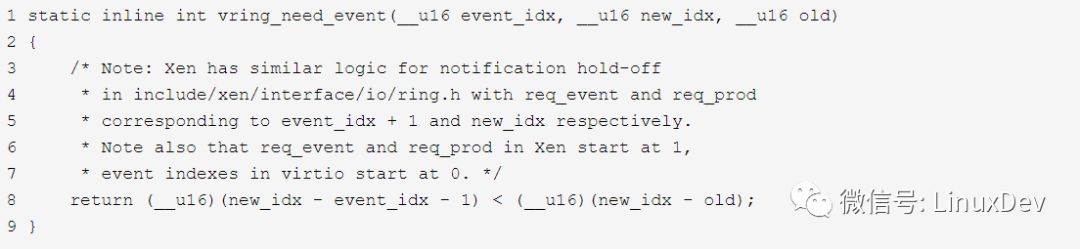

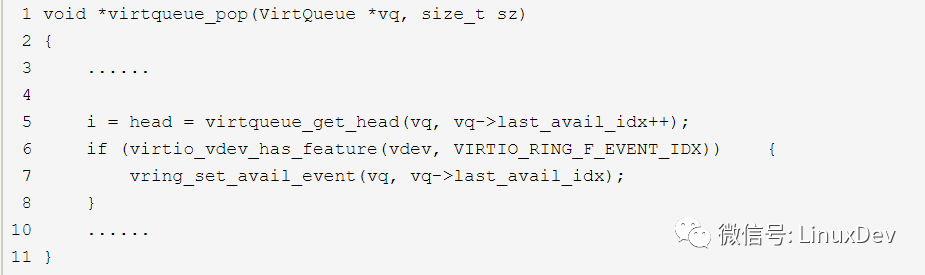

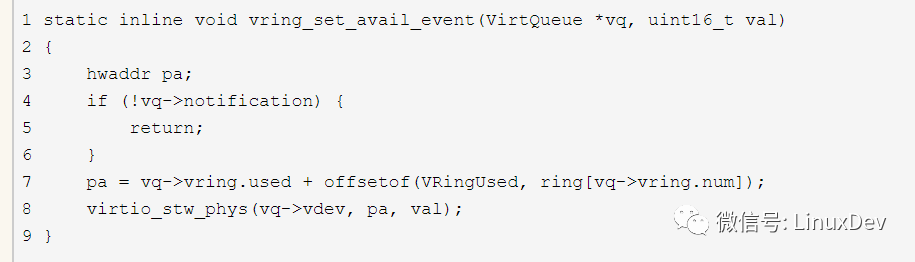

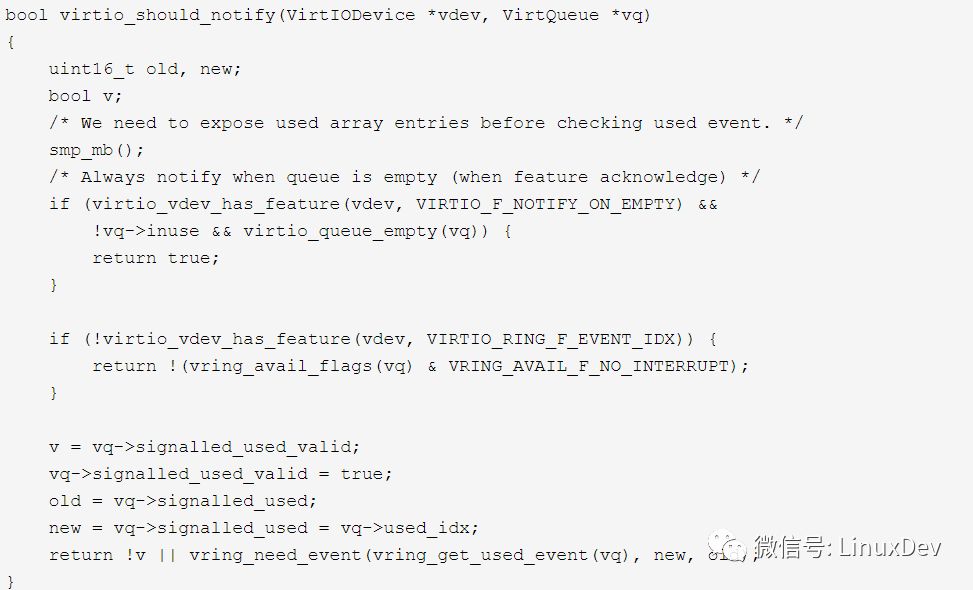

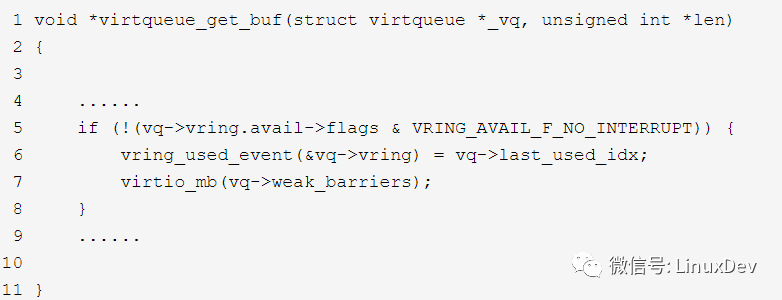

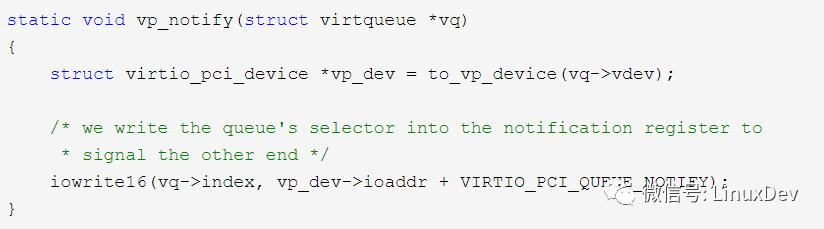

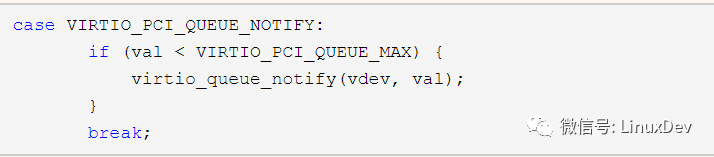

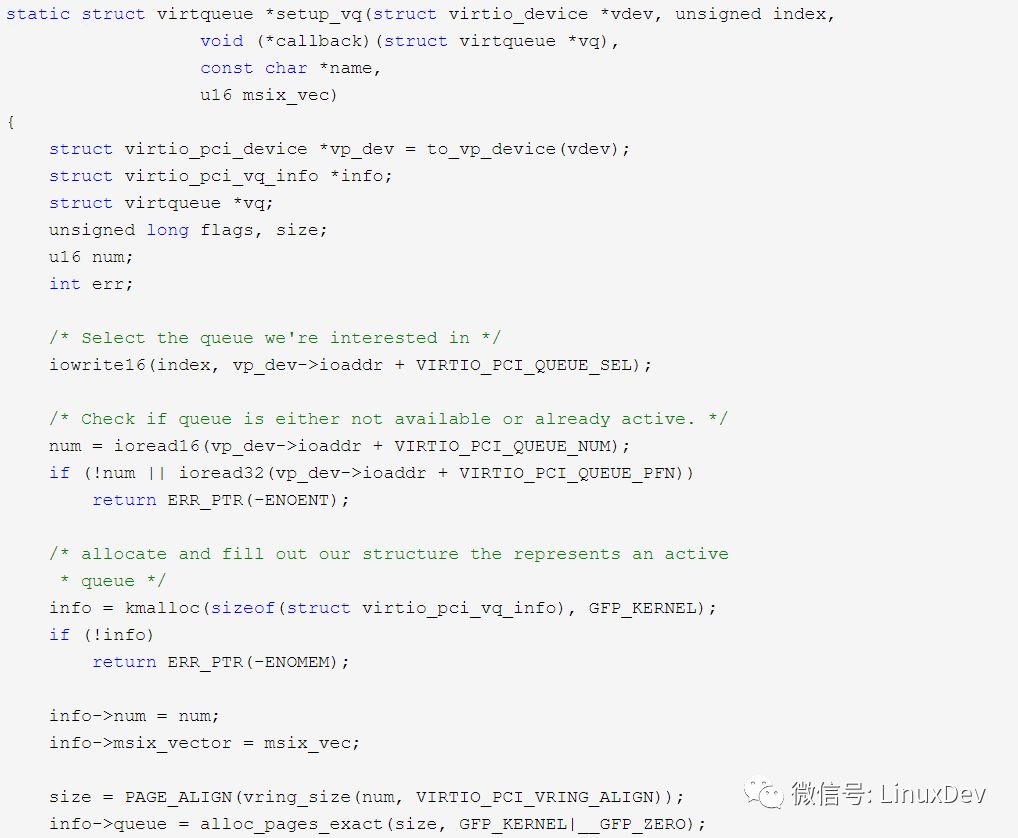

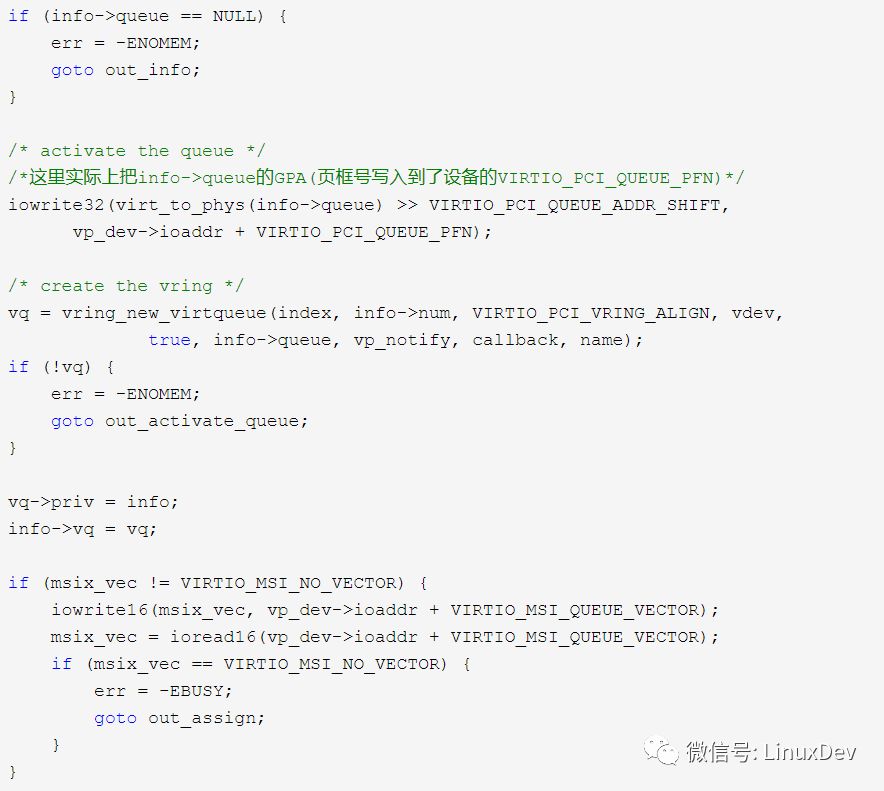

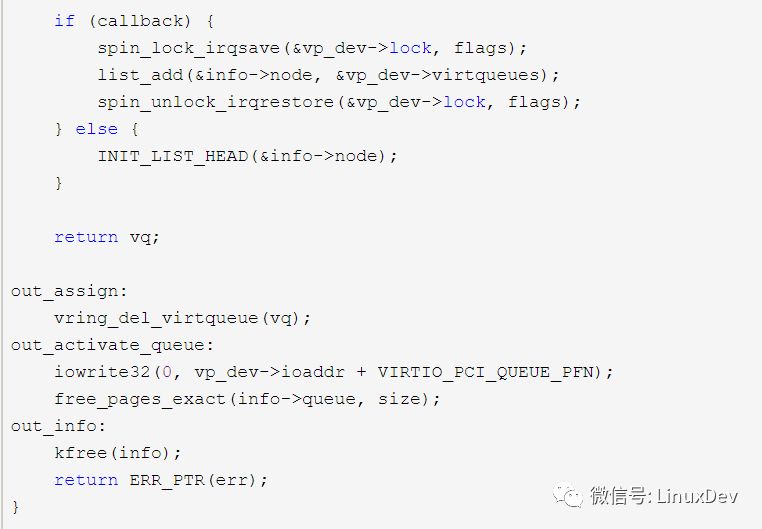

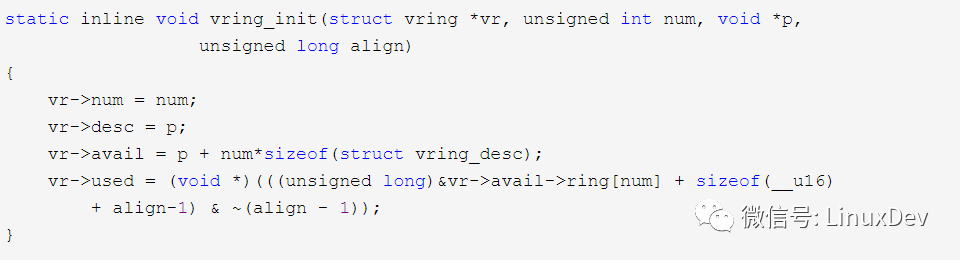

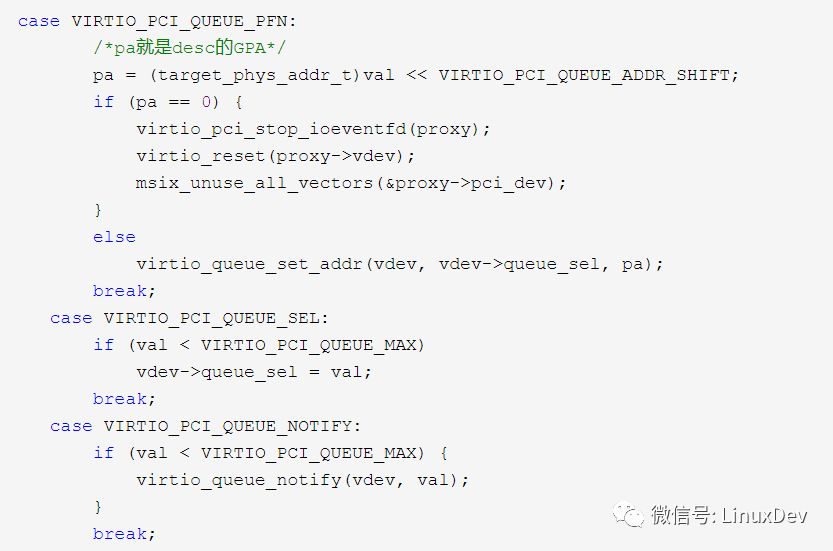

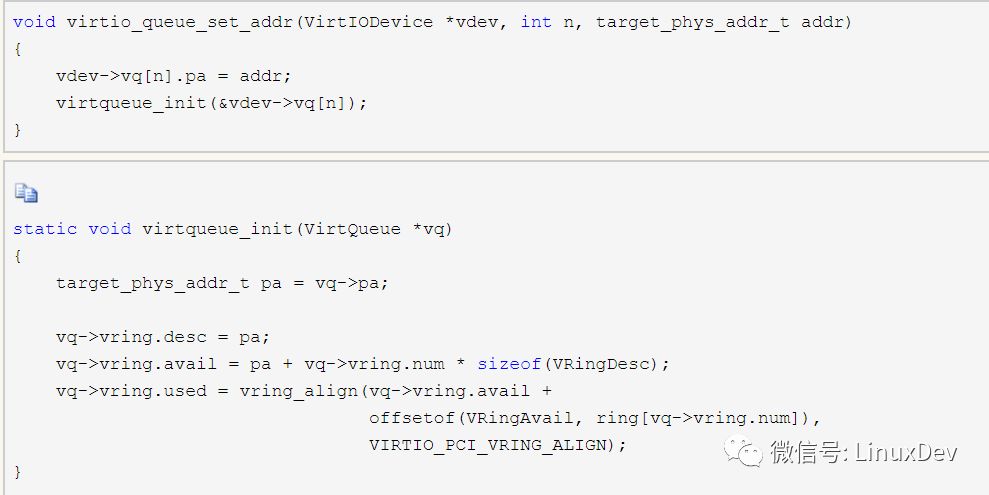

I originally meant to cover this at the end of the front-end driver analysis, but the topic is large and also involves how the back end notifies the front end, so it deserves an article of its own.

Take the network driver as an example. Ignoring the control queue, it has two queues: a receive queue and a send queue. Each queue corresponds to one virtqueue, and the two queues do not affect each other. The front-end and back-end use of a virtqueue is shown in the figure.

In both queues it is the guest that fills the buffers.

For the receive queue, the guest driver fills in empty buffers ahead of time, records them in the avail ring, and notifies the back end at an appropriate time. When a packet arrives from the external network, the QEMU back end takes a buffer from the avail ring, fills in the data, and then records the buffer's head index in the used ring. Finally it notifies the guest at an appropriate time by injecting an interrupt. On receiving the interrupt the guest knows that a packet has arrived. It only needs to read the index from the used ring and take the element at that index from its data array, because when the guest filled the buffer it stored the pointer to the logical buffer in that data array.

The send queue is also filled by the guest, but only when the guest has a packet to send. The packet is built into a logical buffer and added to the send queue, and the back end is notified at an appropriate time. On receiving the notification the QEMU back end knows which queue has a request, and if it is not already processing another packet, it handles this one. Concretely, it again takes the buffer head index from the avail ring and then obtains the buffer through the descriptor table.

On the send path the back end has to copy the data out of the buffer, because the packet is sent out from the host, and then it updates the used ring. Finally, the guest must again be notified at an appropriate time. The guest reads the index from the used ring here as well, but mainly for the sake of delayed notification: the packet was built by the guest, and the buffer it occupied is not reused. A new buffer is built each time there is a packet to send.

That is the basic principle of the send and receive queues. One point was left open above: what is the "appropriate time" for a notification?

virtIO has two ways to control notification between the front end and the back end:

1. The flags field. The flags fields of vring_avail and vring_used control notification between the two ends. The flags in vring_used tell the driver that it does not need to notify the back end when it adds a buffer. The flags in vring_avail tell QEMU that it does not need to interrupt the guest when it consumes a buffer.
2. Event trigger. This is a second mechanism added to virtIO, in which the driver and QEMU each decide whether a notification is needed by setting a threshold. When the number of buffers that one end has added or consumed reaches the specified point, an event is triggered and a notification or an interrupt is sent. When this mechanism is in use, the flags above are ignored.

Here we take the receive queue as an example to analyze the delayed-notification mechanism on both ends.

On the front-end driver side:

When the guest driver receives data through NAPI and finds too few buffers available, it calls a function to add more, namely try_fill_recv. Which type of buffer is added does not matter here. At the end of its loop, the function calls virtqueue_kick(rq->vq).

The argument to virtqueue_kick is the virtqueue of the receive queue. It goes on to call virtqueue_kick_prepare, which decides whether the back end should be notified right now.

Several variables are involved in that function. old is avail.idx before add_sg, new is the current avail.idx, and the third is vring_avail_event(&vq->vring). Its implementation shows that this is the value stored in the slot just past the last entry of the ring array in VRingUsed. Before the back end pops an element from the virtqueue, it sets this slot to the next index it will use in that queue, that is, last_avail_idx.

The vring_need_event function is where the front end and the back end are compared. It tests whether (__u16)(new_idx - event_idx - 1) is less than (__u16)(new_idx - old).

In the initial state, when virtqueue_pop runs for the first time, last_avail_idx is 0. After the increment it becomes 1, and this value is written to the slot after the UsedRing.ring[] entries. Once that is set, the processing that follows the pop is carried out.

After the data has been written, the back end calls virtio_notify(vdev, q->rx_vq). Before notifying, it too must decide whether a notification is needed, and the function that does so is virtio_should_notify. Its logic is broadly similar to the front-end check, with some differences. First, if the queue is empty, that is, no buffer is available, the front end must be notified. Next it checks whether the event-triggered method, VIRTIO_RING_F_EVENT_IDX, is supported. If not, it decides using the flags field. If it is, it decides by event triggering.

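
The front-end kick decision described above can be sketched in a few lines of C. This is a minimal model, not the kernel source: `struct kick_state` and `driver_should_kick` are hypothetical names introduced for illustration, while `vring_need_event` and `VRING_USED_F_NO_NOTIFY` follow the definitions in the Linux virtio ring header.

```c
#include <stdbool.h>
#include <stdint.h>

/* Flag the device sets in used->flags to say "no need to kick me". */
#define VRING_USED_F_NO_NOTIFY 1

/*
 * True when event_idx falls inside the window of indices published by
 * this update, i.e. old_idx <= event_idx < new_idx (modulo 2^16).
 */
bool vring_need_event(uint16_t event_idx, uint16_t new_idx, uint16_t old_idx)
{
    return (uint16_t)(new_idx - event_idx - 1) < (uint16_t)(new_idx - old_idx);
}

/* Hypothetical, flattened view of the state the driver consults. */
struct kick_state {
    bool     event_idx_enabled; /* VIRTIO_RING_F_EVENT_IDX negotiated */
    uint16_t used_flags;        /* used->flags, written by the device */
    uint16_t avail_event;       /* event index published by the device */
};

/* Decide whether the driver must notify the device after adding buffers. */
bool driver_should_kick(const struct kick_state *s,
                        uint16_t old_idx, uint16_t new_idx)
{
    if (s->event_idx_enabled)
        return vring_need_event(s->avail_event, new_idx, old_idx);
    /* Without event indices, fall back to the flags field. */
    return !(s->used_flags & VRING_USED_F_NO_NOTIFY);
}
```

The unsigned 16-bit arithmetic is what makes the comparison safe across index wrap-around: both sides are distances measured backwards from `new_idx`.
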
In the event-triggered case, virtio_should_notify considers two things: v = vq->signalled_used_valid, and vring_need_event(vring_get_used_event(vq), new, old).

vq->signalled_used_valid is set to false at initialization, meaning that the front end has not been notified yet. Every call to virtio_should_notify then sets it to true and updates vq->signalled_used = vq->used_idx. So the first attempt to notify the front end always succeeds. On later attempts the result depends on vring_need_event(vring_get_used_event(vq), new, old), which has the same logic as before. Since this is the first notification attempt, it succeeds.

vring_get_used_event(vq) is the value in the slot just past the last entry of the VRingAvail.ring[] array, and it is set by the guest driver. Back in the Linux driver, buffers are taken from the used ring, and used_event is set each time a buffer is taken out. The code is in the virtqueue_get_buf function in virtio_ring.c. The value written is vq->last_used_idx, which records how far the guest has processed.

That completes one full interaction. Because it was the first one, the delay mechanism on either end has not yet come into play, but the event index used in the checks has now been updated.

Suppose the front end added 8 buffers the first time and notified the back end, and the back end used three buffers and notified the front end for the first time. The back end is now writing data to the fourth buffer, so last_avail_idx = 4 (counting from 0) and avail_event = 4. The front end finds that too few buffers are available and adds 5 more, so new = 8 + 5 = 13, old = 8, and new - old = 5. At this point new - avail_event - 1 = 8, which is not less than 5, so the condition is not met and the front-end driver does not need to notify the back end about the buffers it added.

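
The back-end decision can be modelled the same way. The sketch below is a simplification under stated assumptions: `struct queue_state` and `device_should_notify` are hypothetical names that flatten the per-queue state QEMU keeps, and memory barriers and feature negotiation are left out. Only the order of the checks follows the description above.

```c
#include <stdbool.h>
#include <stdint.h>

/* Flag the driver sets in avail->flags to say "do not interrupt me". */
#define VRING_AVAIL_F_NO_INTERRUPT 1

bool vring_need_event(uint16_t event_idx, uint16_t new_idx, uint16_t old_idx)
{
    return (uint16_t)(new_idx - event_idx - 1) < (uint16_t)(new_idx - old_idx);
}

/* Hypothetical stand-in for the per-queue state held by the back end. */
struct queue_state {
    bool     event_idx_enabled;    /* VIRTIO_RING_F_EVENT_IDX negotiated */
    bool     queue_empty;          /* no available buffers remain */
    uint16_t avail_flags;          /* avail->flags, written by the driver */
    uint16_t used_event;           /* event index published by the driver */
    uint16_t used_idx;             /* current used index */
    uint16_t signalled_used;       /* used index at the last check */
    bool     signalled_used_valid; /* false until the first check */
};

/* Decide whether the device must interrupt the guest. */
bool device_should_notify(struct queue_state *q)
{
    if (q->queue_empty)
        return true;
    if (!q->event_idx_enabled)
        return !(q->avail_flags & VRING_AVAIL_F_NO_INTERRUPT);

    bool first = !q->signalled_used_valid;
    uint16_t old_idx = q->signalled_used;
    uint16_t new_idx = q->used_idx;

    /* Remember where this check happened for the next call. */
    q->signalled_used_valid = true;
    q->signalled_used = new_idx;

    return first || vring_need_event(q->used_event, new_idx, old_idx);
}
```

With the front-end figures from the example (event index 4, new = 13, old = 8), `vring_need_event(4, 13, 8)` evaluates to false, which matches the conclusion that no kick is needed.
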
Meanwhile, the back end has processed a second packet and used 3 buffers. Unfortunately, the front end is still processing the second buffer, so last_used_idx = 2 and used_event = 2. For the back end, new - old = 3 and new - used_event - 1 = 3. Since 3 is not less than 3, the condition is not met and no notification is needed.

This is where delayed notification shows its effect. In my view it is essentially a contest of speed. To keep things fair, a side that processes quickly cannot push data to the other end at will. It may only send once the other side has caught up. The sender gets a rest, and the receiver does not drop data because it could not keep up, which would be a waste. Without rules, nothing can be accomplished!

Specific notification method:

As mentioned above, when the front end or the back end needs the other end to carry out some operation, it uses a notification mechanism. What is that mechanism? There are two directions.

1. guest->host

As mentioned above, when the front end wants to notify the back end it calls virtqueue_kick, which in turn calls virtqueue_notify. That corresponds to the notify function in the virtqueue structure, which is set to vp_notify (in virtio_pci.c) at initialization. Its implementation simply writes the index of the vq into the I/O address space of the device, specifically at the VIRTIO_PCI_QUEUE_NOTIFY position in the device's configuration space. Performing this I/O operation causes a VM exit, and control passes through KVM to QEMU for handling. Next comes the handling in the back-end driver.

In the QEMU code, the function virtio_ioport_write in virtio-pci.c handles I/O writes from the front-end driver. For a queue notification it first checks that the queue number is within the legal range, then calls virtio_queue_notify, which ends up in virtio_queue_notify_vq. That function does nothing more than call the handle_output handler bound to the VirtQueue structure. The handler has a different implementation for each device: the network card has its own, and the block device has its own. Taking the network card as an example, virtio_net_init in virtio-net.c shows which functions are bound when the VirtQueues are created. The receive queue is bound to virtio_net_handle_rx, and the send queue to virtio_net_handle_tx_bh or virtio_net_handle_tx_timer. For block devices the handler is virtio_blk_handle_output.

2. host->guest

The host notifies the guest by injecting an interrupt. The first call is virtio_notify, which calls virtio_notify_vector with the interrupt vector as a parameter. This invokes the notify function associated with the device, implemented by virtio_pci_notify. A regular (non-MSI) interrupt goes through qemu_set_irq. With the 8259A interrupt controller this calls kvm_pic_set_irq and then kvm_set_irq, which talks to KVM through kvm_vm_ioctl. The interface is KVM_IRQ_LINE, which asks KVM to inject an interrupt into the guest. On the KVM side, the kvm_vm_ioctl function handles this call by invoking kvm_vm_ioctl_irq_line, which calls kvm_set_irq to perform the injection. See the interrupt virtualization article for the rest of the process.

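
The guest->host dispatch described above can be modelled as a register write that is routed to a per-queue callback. This is a toy sketch, not QEMU code: `struct virt_queue`, `struct virtio_dev`, `ioport_write`, `queue_notify`, `count_kick` and the `QUEUE_MAX` limit are all hypothetical names. Only the `VIRTIO_PCI_QUEUE_NOTIFY` offset (16 in the legacy register layout) is taken from the real headers.

```c
#include <stddef.h>
#include <stdint.h>

#define VIRTIO_PCI_QUEUE_NOTIFY 16 /* legacy register offset */
#define QUEUE_MAX 64               /* hypothetical per-device queue limit */

struct virt_queue;
typedef void (*handle_output_fn)(struct virt_queue *vq);

/* Toy queue: only the callback and a counter for demonstration. */
struct virt_queue {
    handle_output_fn handle_output; /* device-specific handler */
    unsigned int     kicks;         /* how many times it was notified */
};

struct virtio_dev {
    struct virt_queue vq[QUEUE_MAX];
};

/* Example handler; a real device would process the queue here. */
void count_kick(struct virt_queue *vq)
{
    vq->kicks++;
}

/* Range-check the queue number, then call the bound handler. */
void queue_notify(struct virtio_dev *dev, uint32_t n)
{
    if (n < QUEUE_MAX && dev->vq[n].handle_output != NULL)
        dev->vq[n].handle_output(&dev->vq[n]);
}

/* Model of the back end's handler for guest I/O writes. */
void ioport_write(struct virtio_dev *dev, uint32_t addr, uint32_t val)
{
    switch (addr) {
    case VIRTIO_PCI_QUEUE_NOTIFY:
        queue_notify(dev, val); /* val is the queue index the guest wrote */
        break;
    default:
        break; /* other registers are not modelled here */
    }
}
```

Binding a different `handle_output` per queue is what lets one generic notify path serve network, block and other devices.
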
Shared memory

As mentioned above, when the guest notifies the host it writes the queue index into the VIRTIO_PCI_QUEUE_NOTIFY field of the configuration space. But how does a bare index locate the right queue, and how does the data reach the back end? The answer is shared memory. We know that the two ends pass data through shared memory. The question is how the address of the data gets to the back end, and that is what this section analyzes. To make it easier to follow, the principle comes first and the code second.

After initialization, the front end and the back end share one contiguous memory area. Note that it is physically contiguous in guest-physical address (GPA) terms, and it is allocated when the guest initializes the queue, so the allocation goes through the buddy system. The figure shows the structure of this area. Anyone familiar with vring will recognize it: it is exactly the structure that vring manages. In other words, the two ends share the vring directly. For a given queue, such as the send queue of a network card, the front end and the back end have agreed to exchange data address information through this memory area. Once one end has written the address information into the desc array, it only needs to notify the other end, which then knows where to fetch the data: through the desc array. The data transfer itself is covered in my other summaries.

So in the initialization phase, after the front end allocates the area and initializes its own vring, it passes the location of the area to the back end, and the back end uses it to initialize its vring for that queue. The vring is then consistent on both ends.

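
For readers without the figure, the layout of the shared area can be written down as C structures. The sketch below assumes the legacy split-ring layout and uses plain fixed-width integers instead of the kernel's own typedefs. The structure and function names mirror those in the Linux virtio ring header, but this is an illustrative reimplementation rather than a copy of it.

```c
#include <stddef.h>
#include <stdint.h>

/* One descriptor: where a buffer lives and how to chain to the next. */
struct vring_desc {
    uint64_t addr;  /* guest-physical address of the buffer */
    uint32_t len;   /* buffer length in bytes */
    uint16_t flags; /* e.g. "has next", "device writes" */
    uint16_t next;  /* index of the next descriptor in the chain */
};

/* Written by the driver: descriptor heads offered to the device. */
struct vring_avail {
    uint16_t flags;
    uint16_t idx;
    uint16_t ring[]; /* num entries, followed by used_event */
};

struct vring_used_elem {
    uint32_t id;  /* head index of the consumed descriptor chain */
    uint32_t len; /* bytes written into the buffer */
};

/* Written by the device: descriptor heads it has finished with. */
struct vring_used {
    uint16_t flags;
    uint16_t idx;
    struct vring_used_elem ring[]; /* num entries, followed by avail_event */
};

struct vring {
    unsigned int        num;
    struct vring_desc  *desc;
    struct vring_avail *avail;
    struct vring_used  *used;
};

/* Carve the three parts out of one contiguous block starting at p. */
void vring_init(struct vring *vr, unsigned int num, void *p, unsigned long align)
{
    vr->num = num;
    vr->desc = p;
    vr->avail = (struct vring_avail *)((char *)p + num * sizeof(struct vring_desc));
    /* The used ring starts at the next align boundary after avail. */
    vr->used = (struct vring_used *)(((uintptr_t)&vr->avail->ring[num]
                + sizeof(uint16_t) + align - 1) & ~(uintptr_t)(align - 1));
}

/* Total bytes needed for a ring with num entries. */
size_t vring_size(unsigned int num, unsigned long align)
{
    return ((sizeof(struct vring_desc) * num + sizeof(uint16_t) * (3 + num)
             + align - 1) & ~(size_t)(align - 1))
           + sizeof(uint16_t) * 3 + sizeof(struct vring_used_elem) * num;
}
```

For a 256-entry ring with 4096-byte alignment, the descriptor table occupies the first 4096 bytes, the avail ring starts at offset 4096, the used ring at offset 8192, and `vring_size` returns 10246.
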
That is the principle. Now for the initialization code.

Front end:

The call chain is virtnet_probe->init_vqs->virtnet_find_vqs->vi->vdev->config->find_vqs(vp_find_vqs)->vp_try_to_find_vqs->setup_vq. setup_vq talks to the back end through I/O ports to complete the agreement described above. Note the negotiation steps:

1. Select the queue for this operation by writing its index to VIRTIO_PCI_QUEUE_SEL. Each queue has its own vring and therefore needs its own shared memory area.
2. Check that the queue is available by reading VIRTIO_PCI_QUEUE_NUM. A result of 0 means no queue is available, and an error is returned.
3. Check whether the queue has already been activated by reading VIRTIO_PCI_QUEUE_PFN. If it has, an error is also returned.

Once these checks pass, initialization can begin. First an intermediate structure, virtio_pci_vq_info, is allocated. It is not the focus here. Then alloc_pages_exact requests physically contiguous memory of at least size bytes from the buddy system (the size is discussed later), and the physical page frame number of that memory (GPA >> VIRTIO_PCI_QUEUE_ADDR_SHIFT) is written to VIRTIO_PCI_QUEUE_PFN, so that the back end learns where the area is.

What does the front end do with this area? The vring_new_virtqueue function that follows calls vring_init to initialize the vring, and vring_init mirrors the structure diagram above. With that, the front-end vring is initialized, and when the queue is later filled with data, the information is filled in according to this vring.

Backend (qemu side)

The main operations are in virtio_ioport_write, and only three cases matter here. In the VIRTIO_PCI_QUEUE_SEL case, the handler only records queue_sel in the device to mark the index of the queue being operated on.

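
The front-end negotiation steps can be replayed against a toy device to make the order of register accesses concrete. Everything here is a hypothetical model: `struct toy_device`, `setup_queue`, `device_ring_address`, `TOY_NUM_QUEUES` and the negative return codes are invented for illustration, and register accesses are replaced by plain field reads and writes. Only `VIRTIO_PCI_QUEUE_ADDR_SHIFT` (12) is taken from the legacy virtio PCI header.

```c
#include <stdint.h>

#define VIRTIO_PCI_QUEUE_ADDR_SHIFT 12 /* ring address is passed as a 4 KiB frame number */
#define TOY_NUM_QUEUES 2               /* hypothetical number of queues */

/* Toy device: each field stands in for one legacy register. */
struct toy_device {
    uint16_t queue_sel;                 /* VIRTIO_PCI_QUEUE_SEL */
    uint16_t queue_num[TOY_NUM_QUEUES]; /* VIRTIO_PCI_QUEUE_NUM, 0 = unavailable */
    uint32_t queue_pfn[TOY_NUM_QUEUES]; /* VIRTIO_PCI_QUEUE_PFN, 0 = not activated */
};

/*
 * Driver side: select, check size, check not active, publish the ring.
 * Returns 0 on success; the negative codes are illustrative only.
 */
int setup_queue(struct toy_device *dev, uint16_t index, uint64_t ring_gpa)
{
    if (index >= TOY_NUM_QUEUES)
        return -1;

    dev->queue_sel = index;                   /* step 1: select the queue */

    if (dev->queue_num[dev->queue_sel] == 0)  /* step 2: is it available? */
        return -2;

    if (dev->queue_pfn[dev->queue_sel] != 0)  /* step 3: already activated? */
        return -3;

    /* Publish the ring location as a page frame number. */
    dev->queue_pfn[dev->queue_sel] =
        (uint32_t)(ring_gpa >> VIRTIO_PCI_QUEUE_ADDR_SHIFT);
    return 0;
}

/* Device side: recover the guest-physical address of the ring. */
uint64_t device_ring_address(const struct toy_device *dev, uint16_t index)
{
    return (uint64_t)dev->queue_pfn[index] << VIRTIO_PCI_QUEUE_ADDR_SHIFT;
}
```

Because only the frame number is exchanged, the ring must start on a page boundary, which is one reason the driver allocates whole pages for it.
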
When the address is passed through VIRTIO_PCI_QUEUE_PFN, the handler calls virtio_queue_set_addr to set up the vring of the corresponding back-end queue. The implementation is fairly simple and will look familiar: it closely resembles the front-end function that initializes the vring, so the vrings on the two ends are now in sync.

When the guest notifies the back end through the VIRTIO_PCI_QUEUE_NOTIFY interface, the handler calls virtio_queue_notify_vq and then vq->handle_output, and the back end is told to start processing.

Postscript:

That concludes the analysis of virtIO. Along the way I really felt how much I still have to learn. I asked developers for help many times and always received thoughtful answers, and I would like to thank these excellent developers.

Sometimes, reading kernel code, it feels as if the engineer and the hardware are at war. The engineer does everything possible to squeeze performance out of the hardware, from something as large as optimizing an algorithm to something as small as analyzing the probabilities of a program's execution paths so that compilation can be optimized. The hardware's position is: handle me badly and I will not work for you. In this respect the engineer naturally wins, and keeps pushing toward a further kind of victory, namely conquering the hardware. But everyone knows this is a battle with no winner or loser. Engineers are competitive by nature, and because of the competition among engineers the battle will never end. Enough rambling. Friends, see you in the next article!