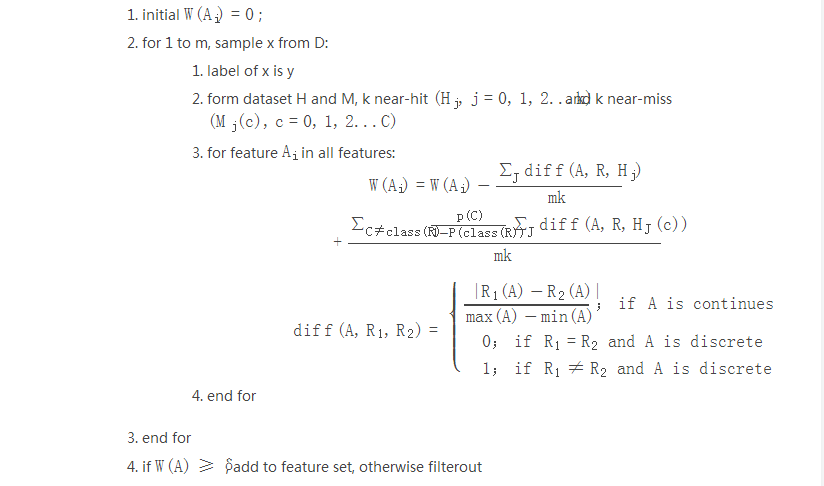

The concept of high-dimensional data is not difficult. Simply speaking, it means multidimensional data. Usually we often touch one-dimensional data or two-dimensional data that can be written in the form of a table. High-dimensional data can also be analogized. However, when the dimension is high, the visual representation is difficult. At present, high-dimensional data mining is the focus of research. High-dimensional data mining is based on a high-dimensional data mining. The main difference between it and traditional data mining lies in its high dimension. At present, high-dimensional data mining has become the focus and difficulty of data mining. As technology advances, data collection becomes easier, resulting in larger and more complex databases, such as various types of trade transaction data, Web documents, gene expression data, document word frequency data, User rating data, WEB usage data, and multimedia data, etc., their dimensions (attributes) can usually reach hundreds of dimensions or even higher. Due to the universality of high-dimensional data, the research on high-dimensional data mining has very important significance. However, due to the impact of “dimensional disastersâ€, high-dimensional data mining has become extremely difficult, and some special methods must be used for processing. As the data dimension increases, the performance of high-dimensional index structures declines rapidly. In low-dimensional space, we often use Euclidean distance as the measure of similarity between data, but in many cases in high-dimensional space, this similarity The concept of sex no longer exists, which brings a severe test to high-dimensional data mining. On the one hand, the performance of data mining algorithms based on index structure is degraded. On the other hand, many mining methods based on full-space distance functions will also Invalid. The solution can be as follows: the data can be reduced from high-dimensional to low-dimensional by dimensionality reduction, and then processed by low-dimensional data processing; the algorithm efficiency can be reduced by designing a more efficient index structure. Incremental algorithms and parallel algorithms are used to improve the performance of the algorithm; the problem of failure is re-defined to make it new. High-dimensional data mining is based on a high-dimensional data mining. The main difference between it and traditional data mining lies in its high dimension. At present, high-dimensional data mining has become the focus and difficulty of data mining. As technology advances, data collection becomes easier, resulting in larger and more complex databases, such as various types of trade transaction data, Web documents, gene expression data, document word frequency data, User rating data, WEB usage data, and multimedia data, etc., their dimensions (attributes) can usually reach hundreds of dimensions or even higher. Due to the universality of high-dimensional data, the research on high-dimensional data mining has very important significance. However, due to the impact of “dimensional disastersâ€, high-dimensional data mining has become extremely difficult, and some special methods must be used for processing. As the data dimension increases, the performance of high-dimensional index structures declines rapidly. In low-dimensional space, we often use Euclidean distance as the measure of similarity between data, but in many cases in high-dimensional space, this similarity The concept of sex no longer exists, which brings a severe test to high-dimensional data mining. On the one hand, the performance of data mining algorithms based on index structure is degraded. On the other hand, many mining methods based on full-space distance functions will also Invalid. The solution can be as follows: the data can be reduced from high-dimensional to low-dimensional by dimensionality reduction, and then processed by low-dimensional data processing; the algorithm efficiency can be reduced by designing a more efficient index structure. Incremental algorithms and parallel algorithms are used to improve the performance of the algorithm; the problem of failure is re-defined to make it new. PCA Unsupervised Using the covariance matrix to find the projection function ω so that the maximum dispersion (variance) after projecting into a low-dimensional space uses the Lagrangian solution inequality Selecting feature vectors based on the obtained eigenvalues General feature vector set with information rate above 90% For data where N is much larger than D, use SVD (singular value) to solve First perform a self-property reduction and then train LDA Supervisory Seeking a projection space that minimizes intraclass variance and maximizes interclass differences SOM Clustering method - Take differences to update neighbors in the surrounding range MDS Unsupervised dimensionality reduction Focus on the relative distance (relationship) of data, which is conducive to dimensionality reduction and visualization of streaming data. But the overall structure of the original data is seriously damaged. Three basic steps: Calculating stress Update projection function Check disparity ReliefF ReliefF handles multiple classifications, and Relief can only handle two classifications. Used to weight features and filter them by weights Algorithm input: data set D, including class c samples, subset sample number m, weight threshold δ, kNN coefficient k algorithm steps: LLE and ISOMAP Some summary The basic idea of ​​high latitude data modeling is to find the function f(x): f(x) projects data into a low-dimensional space Certain features of the data in a low dimensional space can be maintained Method selection: Focus on reducing dimensions and improving data analyzability using PCA, using SVD for large amounts of data Focus on inter-class and intra-class distinctions, use LDA Focus on the interrelationship of data, and the data is inseparable, then use MDS For manifolds, use LLE and IOSMAP Pvc Trunking,Pvc Cable Trunking,Plastic Cable Trunking,Pvc Electrical Trunking FOSHAN SHUNDE LANGLI HARDWARE ELECTRICAL CO.LTD , https://www.langliplastic.com