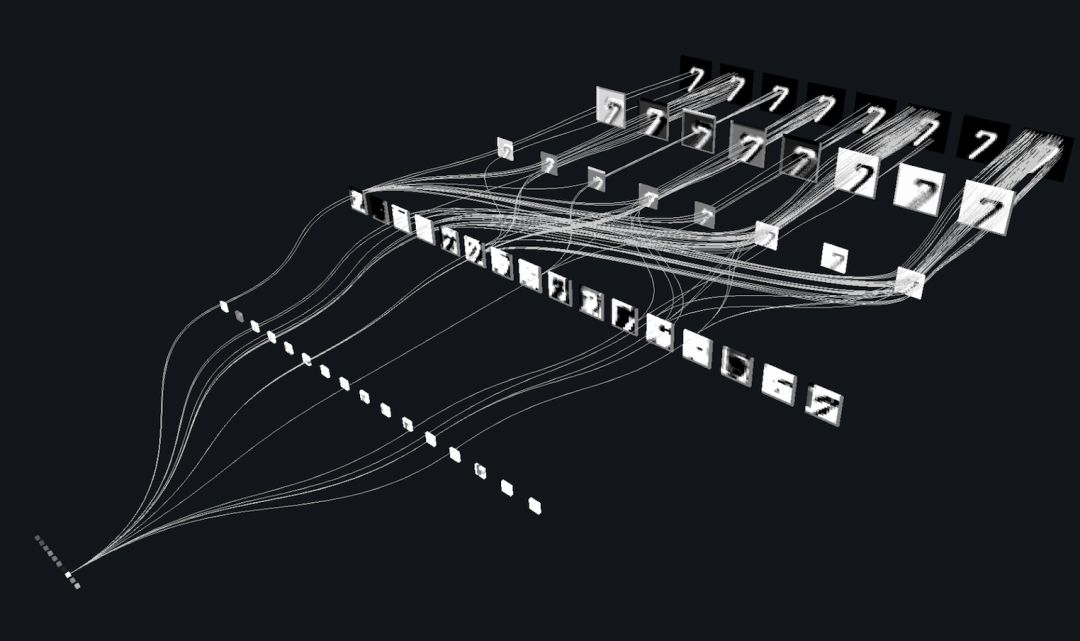

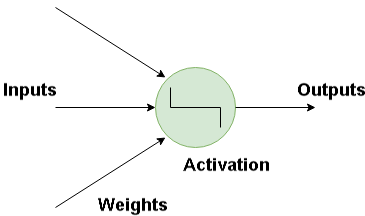

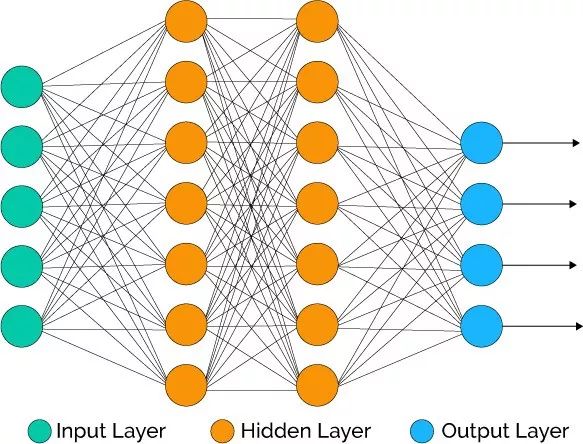

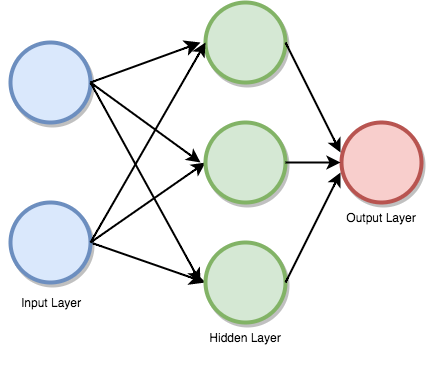

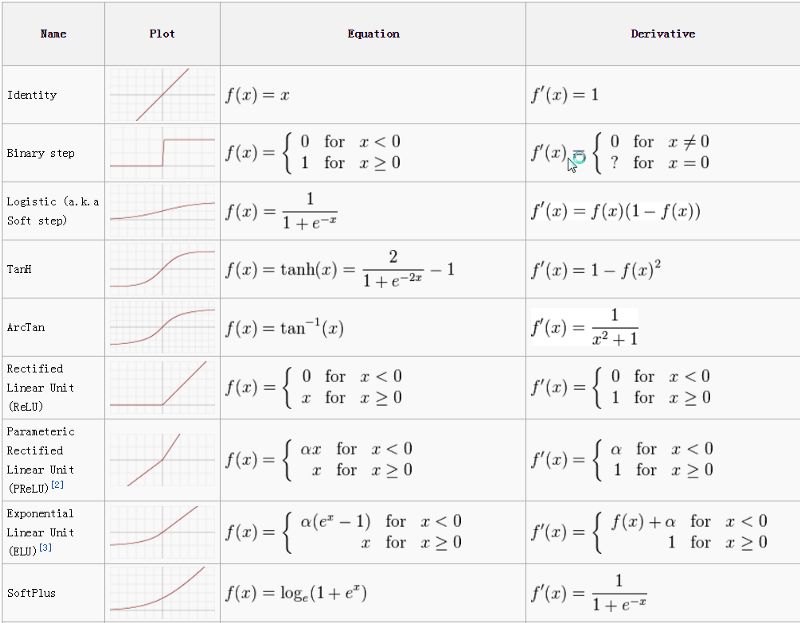

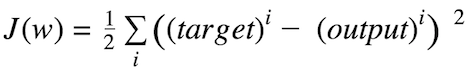

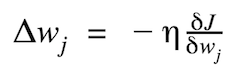

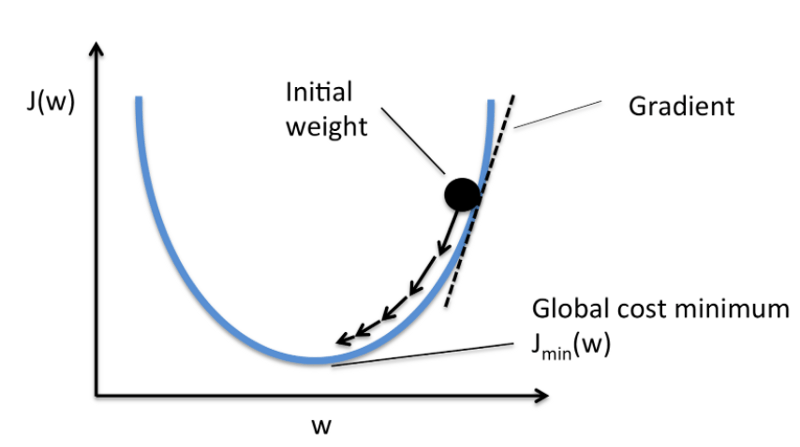

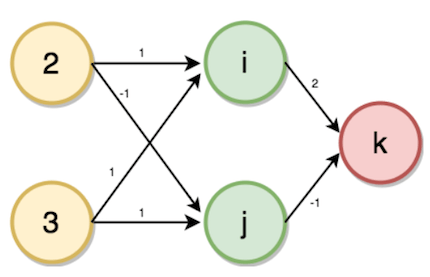

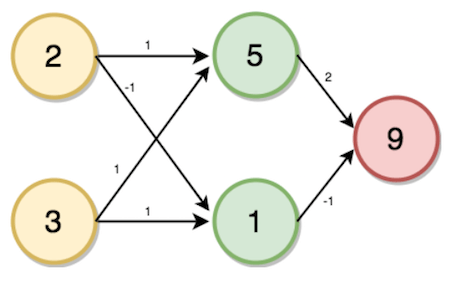

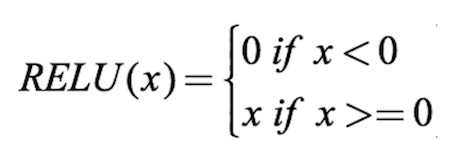

Vihar Kurama, a Contributing Member of the Python Software Foundation, gives a concise introduction to the basic concepts of deep learning and walks through an example of a deep learning network built with Keras.

The main idea behind deep learning is that artificial intelligence should take inspiration from the human brain. This view gave rise to the term "neural network." The brain contains billions of neurons, with tens of thousands of connections between them. In many respects, deep learning algorithms mimic the brain: both the brain and deep learning models involve a large number of computational units (neurons) that are not intelligent on their own but become intelligent when they interact with one another.

"I think people need to understand that deep learning makes a lot of things better behind the scenes. Google search and image search already use deep learning; it lets you search for images with a term like 'hug.'" - Geoffrey Hinton

Neurons

The basic building blocks of a neural network are artificial neurons, which mimic the neurons of the human brain. They are simple yet powerful computational units that take weighted input signals and use an activation function to produce an output signal. Neurons are spread across the layers of a neural network.

How does an artificial neural network work?

Deep learning uses artificial neural networks, which are modeled on the networks of neurons in the human brain. As data flows through this artificial network, each layer processes one aspect of the data, filters out outliers, identifies familiar entities, and finally produces the output.

Input Layer

This layer consists of neurons that only receive the inputs and pass them on to the other layers. The number of neurons in the input layer should equal the number of attributes (features) in the dataset.

Output Layer

The output layer produces the predicted output. What that output looks like depends on the type of model being built.
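The artificial neuron described above (weighted input signals in, an activation function producing the output) can be sketched in a few lines of Python. This is a minimal illustration, not code from the original article: the function names `neuron` and `relu`, the bias term, and the input and weight values are all hypothetical.

```python
import math


def relu(z):
    """Rectified linear unit: passes positive values through, outputs 0 otherwise."""
    return max(0.0, z)


def neuron(inputs, weights, bias=0.0, activation=relu):
    """A single artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("expected exactly one weight per input")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # weighted input signal
    return activation(z)


# Hypothetical inputs, weights and bias, chosen only for illustration.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
print(neuron(inputs, weights, bias=0.5))                        # weighted sum is -0.25, so ReLU gives 0.0
print(neuron(inputs, weights, bias=0.5, activation=math.tanh))  # same sum, squashed into (-1, 1)
```

Passing the activation in as a function keeps the weighted-sum part fixed while making it easy to swap one activation for another.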
Hidden Layer

Hidden layers sit between the input layer and the output layer. During training, the weights of the hidden layers are updated to improve the network's predictive power.

Neuron weights

A weight represents the strength of the connection between two neurons. If you are familiar with linear regression, you can think of the weights on the inputs as the coefficients in a regression equation. Weights are usually initialized to small random values, for example values between 0 and 1.

Feedforward deep networks

Feedforward supervised neural networks were among the first and most successful neural network models. They are also sometimes called multilayer perceptrons (MLPs), or simply neural networks. The input is passed forward through the network, layer by layer, through the activated neurons until an output value is produced. This is called the forward pass of the network.

Activation function

An activation function maps the weighted sum of a neuron's inputs to the neuron's output. It is called an activation function because it controls which neurons are activated and how strong their output signals are. There are many activation functions; among the most common are ReLU, tanh, and SoftPlus.

Image source: ml-cheatsheet

Backpropagation

The network's predicted output is compared with the expected output, and the error is computed with a function. The error is then propagated backward through the whole network, one layer at a time, and each weight is updated according to how much it contributed to the error. This is the backpropagation algorithm. The process is repeated for every sample in the training set; one pass that updates the network over the entire training dataset is called an epoch. A network may need to be trained for tens, hundreds, or thousands of epochs.

Cost function and gradient descent

The cost function measures how well the neural network performs for a given training input and its expected output. It may also depend on parameters such as the weights and biases.
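A tiny worked case makes the cost function and gradient descent concrete. The sketch below is an illustration under stated assumptions, not the article's code: it assumes a hypothetical one-weight model `y_hat = w * x`, uses the sum of squared errors as the cost, and runs plain batch gradient descent, updating `w` once per pass (epoch) over made-up data. The names `sse`, `sse_gradient`, and `gradient_descent` are hypothetical, and `learning_rate` plays the role of the learning rate.

```python
def sse(w, xs, ys):
    """Sum of squared errors J(w) for the one-weight model y_hat = w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys))


def sse_gradient(w, xs, ys):
    """Derivative dJ/dw = -2 * sum((y - w * x) * x)."""
    return -2.0 * sum((y - w * x) * x for x, y in zip(xs, ys))


def gradient_descent(xs, ys, learning_rate=0.01, epochs=20, w=0.0):
    """Batch gradient descent: one weight update per pass (epoch) over all samples."""
    history = []
    for _ in range(epochs):
        history.append(sse(w, xs, ys))                   # cost before this epoch's update
        w = w - learning_rate * sse_gradient(w, xs, ys)  # step against the gradient
    return w, history


# Hypothetical training data generated from y = 2x, so the ideal weight is 2.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w, history = gradient_descent(xs, ys)
print(f"learned w = {w:.6f}")
print(f"cost: {history[0]:.1f} -> {history[-1]:.2e}")
```

The cost returned by `sse` is a single number, and each update moves `w` against the gradient. On this particular data the updates converge only for learning rates below 1/30 (about 0.033); larger steps overshoot and diverge, which is why the learning rate matters.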
The cost function is usually a single value (a scalar) rather than a vector, because it evaluates the performance of the network as a whole. With the gradient descent optimization algorithm, the weights are updated incrementally after each epoch.

For example, the sum of squared errors (SSE) is a commonly used cost function:

$$J(\mathbf{w}) = \sum_{i} \left(y^{(i)} - \hat{y}^{(i)}\right)^2$$

where $y^{(i)}$ is the expected output and $\hat{y}^{(i)}$ the network's prediction for training sample $i$. The size and direction of each weight update are obtained from the gradient of the cost:

$$\Delta w_j = -\eta \frac{\partial J}{\partial w_j}, \qquad w_j \leftarrow w_j + \Delta w_j$$

where $\eta$ is the learning rate.

Below is a schematic diagram of the gradient of a cost function with respect to a single coefficient:

Multilayer perceptron (forward propagation)

A multilayer perceptron contains multiple layers of neurons, usually connected in a feedforward fashion: each neuron in one layer is directly connected to the neurons in the next layer. In many applications, multilayer perceptrons use sigmoid or ReLU activation functions.

Let's look at an example. Given the number of family members and the number of accounts as inputs, we want to predict the number of transactions. First, we need a multilayer perceptron, that is, a feedforward neural network. Ours has an input layer, one hidden layer, and an output layer; the inputs are 2 family members and 3 accounts, as shown in the following figure:

The values of the hidden-layer nodes (i, j) and the output-layer node (k) are computed with the following forward pass:

$$
\begin{aligned}
i &= (2 \times 1) + (3 \times 1) = 5 \\
j &= (2 \times -1) + (3 \times 1) = 1 \\
k &= (5 \times 2) + (1 \times -1) = 9
\end{aligned}
$$

This calculation does not use an activation function. In practice, to make full use of a neural network's predictive power, we also need an activation function to introduce nonlinearity. For example, take the ReLU activation function, $\mathrm{relu}(x) = \max(0, x)$. This time the input is [3, 4], and the weights are [2, 4] for node i, [4, -5] for node j, and [2, 7] for node k:
$$
\begin{aligned}
i &= \mathrm{relu}\big((3 \times 2) + (4 \times 4)\big) = \mathrm{relu}(22) = 22 \\
j &= \mathrm{relu}\big((3 \times 4) + (4 \times -5)\big) = \mathrm{relu}(-8) = 0 \\
k &= \mathrm{relu}\big((22 \times 2) + (0 \times 7)\big) = \mathrm{relu}(44) = 44
\end{aligned}
$$

Developing a neural network with Keras

About Keras

Keras is a high-level neural network API, written in Python, that can run on top of TensorFlow, CNTK, or Theano. (Translator's note: Theano is no longer maintained.)

Install Keras with pip by running the following command:

```shell
sudo pip install keras
```

Steps to implement a deep learning program in Keras

1. Load the data.
2. Define the model.
3. Compile the model.
4. Fit (train) the model.
5. Evaluate the model.
6. Tie it all together.

Developing the Keras model

Keras uses the `Dense` class to define fully connected layers. Its arguments let us specify the number of neurons in the layer, the weight initialization method, and the activation function. Once the model is defined, we compile it; compilation calls into the backend framework, such as TensorFlow. We then train the model on the data by calling the model's `fit()` method.
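Keras documents `Dense` as computing `activation(dot(input, kernel) + bias)`, where the kernel has one row per input and one column per unit. As an illustration only (pure Python, not Keras's implementation, with the bias omitted to match the hand calculations), the sketch below applies that formula to the two forward-propagation examples worked through earlier. The helper names `dense`, `relu`, and `identity` are hypothetical.

```python
def relu(z):
    """ReLU activation: max(0, z)."""
    return max(0, z)


def identity(z):
    """No activation: used for the first, purely linear example."""
    return z


def dense(inputs, kernel, activation=relu):
    """Fully connected layer without bias.

    kernel[r][c] is the weight from input r to unit c, so each unit's output is
    activation(sum over r of inputs[r] * kernel[r][c]).
    """
    n_units = len(kernel[0])
    return [
        activation(sum(x * row[c] for x, row in zip(inputs, kernel)))
        for c in range(n_units)
    ]


# Linear example: inputs [2, 3] (family members, accounts).
# Hidden unit i has weights [1, 1], j has [-1, 1]; output k has weights [2, -1].
hidden_linear = dense([2, 3], [[1, -1], [1, 1]], activation=identity)
output_linear = dense(hidden_linear, [[2], [-1]], activation=identity)
print(hidden_linear, output_linear)  # [5, 1] [9]

# ReLU example: inputs [3, 4].
# Hidden unit i has weights [2, 4], j has [4, -5]; output k has weights [2, 7].
hidden_relu = dense([3, 4], [[2, 4], [4, -5]])
output_relu = dense(hidden_relu, [[2], [7]])
print(hidden_relu, output_relu)  # [22, 0] [44]
```

Storing each unit's weights as a column of the kernel is what lets a whole layer be computed as one matrix product, which is how libraries evaluate `Dense` layers efficiently.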
```python
import numpy
import keras
from keras.models import Sequential
from keras.layers import Dense, Input

# Seed the Python, NumPy and backend random number generators for repeatable runs
seed = 7
keras.utils.set_random_seed(seed)

# Load the dataset (Pima Indians Diabetes): 8 feature columns, then the 0/1 label
dataset = numpy.loadtxt('datasets/pima-indians-diabetes.csv', delimiter=",")
X = dataset[:, 0:8]
Y = dataset[:, 8]

# Define the model: 8 inputs -> 16 ReLU units -> 8 ReLU units -> 1 sigmoid output
model = Sequential()
model.add(Input(shape=(8,)))
model.add(Dense(16, kernel_initializer='random_uniform', activation='relu'))
model.add(Dense(8, kernel_initializer='random_uniform', activation='relu'))
model.add(Dense(1, kernel_initializer='random_uniform', activation='sigmoid'))

# Compile the model
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Fit the model
model.fit(X, Y, epochs=150, batch_size=10)

# Evaluate the model; scores is [loss, accuracy]
scores = model.evaluate(X, Y)
print("acc: %.2f%%" % (scores[1] * 100))
```

Output:

```
$ python keras_pima.py
768/768 [==============================] - 0s - loss: 0.6776 - acc: 0.6510
Epoch 2/150
768/768 [==============================] - 0s - loss: 0.6535 - acc: 0.6510
Epoch 3/150
768/768 [==============================] - 0s - loss: 0.6378 - acc: 0.6510
...
Epoch 149/150
768/768 [==============================] - 0s - loss: 0.4666 - acc: 0.7786
Epoch 150/150
768/768 [==============================] - 0s - loss: 0.4634 - acc: 0.7734
 32/768 [>.............................] - ETA: 0s
acc: 77.73%
```

After training for 150 epochs, the model reached 77.73% accuracy on the training data.
