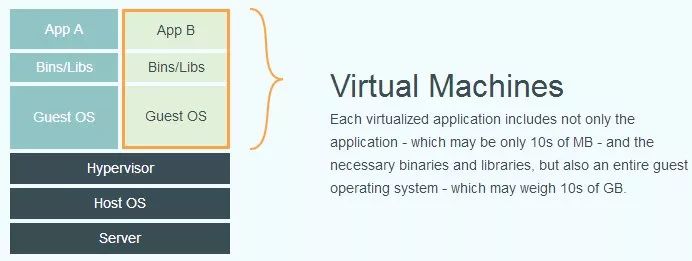

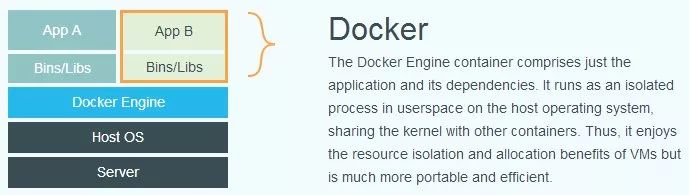

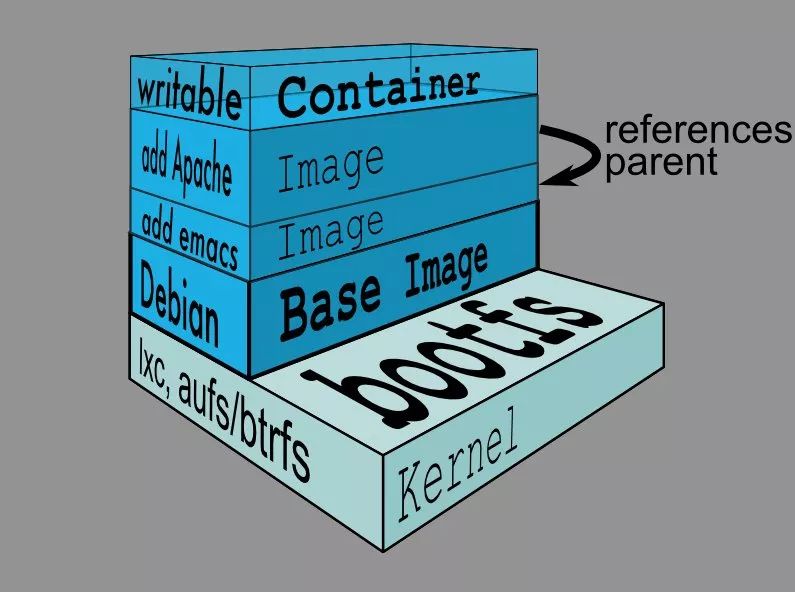

Generally speaking, an SPA project only needs a static file server, but traditional projects are different: a project depends on many server programs. Previously, our development model was to deploy the development environment on a shared development machine, and everyone developed on that machine over Samba. That was fine for old-fashioned development, but the front end has since introduced a compilation step, which adds Webpack packaging and building to the workload. When many people developed at the same time, the machine often ran out of memory and the builds failed. After struggling with this problem, I decided to introduce Docker and solve it by moving the development environment onto each developer's local machine.

Why Docker?

Ordinary web services generally rely on many programs, such as PHP, MySQL, Redis, Node, and so on. Normally we install these programs by hand to set up the environment the service needs, which causes several problems:

- Different services in the same environment rely on different versions of the same software. Classic examples are python2 versus python3, or a local Mac that ships PHP 7 while the service only supports PHP 5.6.
- Different services in the same environment may modify the same files, such as the system configuration or the Nginx configuration, and so affect one another.
- Deploying the same service on multiple machines requires repeated manual work, which wastes a lot of labor.

Installing software piece by piece is too much trouble, so people wanted to simply package the entire system and drop it onto a machine. That is virtual machine technology. It keeps the system environment stable and avoids repetitive manual work, but it brings problems of its own:

1. The packaged virtual machine file contains the system image, so it is particularly large.
2. The packaged virtual machine file contains the system image, so the service must wait for the guest system to boot before it can start.
3. The packaging process cannot be automated.

For the third point, Vagrant later made image building scriptable through the Vagrantfile, but the other two points remained unresolved. So later came process-level virtualization on the host system: Docker. It brings the following advantages:

- The system does not need to be packed into the image, so images are very small.
- There is no virtual system to boot, so containers start quickly and use few resources.
- The sandbox mechanism guarantees environment isolation between services.
- The Dockerfile mechanism automates image building and deployment.
- Docker Hub provides a platform for sharing images.

The specific difference between VM and Docker technology is this: a VM packages the guest OS into the image, whereas Docker virtualizes directly on top of the host system.

Docker basics

Docker can be installed on Windows, Linux, and Mac as well as on AWS and Azure; Windows requires Windows 10 or later and Mac requires El Capitan or later. Docker is a client/server (C/S) service, so after installation the Docker service must be started before the docker command can be used.

Docker has four basic concepts: Dockerfile, Image, Container, and Repository. From a Dockerfile we generate a Docker image. Images you create yourself can be uploaded to Docker Hub, and you can pull the images you need from it. Once an image has been pulled locally, we can instantiate it as a container. A simple command to start an image is:

    $ docker run [organization name/]<image name>[:image tag]

Everything except the image name is optional. The organization name defaults to library, and if the tag is omitted it defaults to latest.
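
The defaulting rule for image names described above can be sketched in a few lines of Python. This is only an illustration of the rule, not Docker's own parser: the function name `normalize_image_ref` is made up for this example, and the sketch assumes a plain `organization/name:tag` reference without a registry host, port, or digest.

```python
def normalize_image_ref(ref: str) -> str:
    """Fill in the default organization and tag for a short image reference.

    Simplified on purpose: registry hosts (for example a host with a port)
    and digests are NOT handled here.
    """
    # Split off the tag, if any: "nginx:1.13" -> name "nginx", tag "1.13".
    name, sep, tag = ref.partition(":")
    if not sep or not tag:
        tag = "latest"  # default tag when none is given
    if "/" not in name:
        name = "library/" + name  # default organization
    return f"{name}:{tag}"


print(normalize_image_ref("hello-world"))             # library/hello-world:latest
print(normalize_image_ref("lizheming/drone-wechat"))  # lizheming/drone-wechat:latest
print(normalize_image_ref("nginx:1.13"))              # library/nginx:1.13
```

So `docker run hello-world` and `docker run library/hello-world:latest` refer to the same image under this rule.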

Take the classic process of starting the hello-world image: when I instantiate the image, Docker finds no local copy and pulls it from Docker Hub, using the default latest tag as just described. Once pulled, the image is instantiated and its entry command is executed.

Besides browsing Docker Hub, we can also use the docker search command to find the image we need. A 2016 article [3] reported that the number of images on Docker Hub had exceeded 400,000 and was growing by 4,000 to 5,000 per week.

Let's look at how to run an Nginx container instance:

    $ docker run -d --rm -p 8080:80 -v "$PWD/workspace":/var/ -v "$PWD/hello.world.conf":/etc/nginx/conf.d/hello.world.conf nginx

The docker run command starts an instance. Here -p maps local port 8080 to port 80 in the container, and -v maps the local $PWD/workspace folder to the /var/ folder in the container; the second -v works the same way for the configuration file. Specifying the image name last completes the startup of the Nginx instance. At this point, visit http://hello.world:8080 to see the result.

Note: Do not store content inside a container instance. When the instance is destroyed, everything in it is destroyed too; the next start gives a brand-new instance without the old content. If you need persistent storage, use mounted volumes or an external storage service.

Dockerfile

The Dockerfile is one of Docker's more important concepts. It is the core of how Docker creates images, and it gives Docker two major advantages:

- Images are generated from a text file, which makes version management and automated deployment easy.
- Each instruction corresponds to one image layer. Splitting the operations finely allows incremental updates, and shared layers are reused to reduce image size.
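
To make the idea of incremental layers concrete, here is a toy model in Python. It is not how Docker computes its cache keys (real builds also consider file contents and other metadata); it only assumes that a layer's identity depends on its own step plus every step before it. The step strings and function names are hypothetical.

```python
import hashlib


def layer_ids(steps):
    """Return one ID per build step; each ID depends on that step and all earlier ones."""
    ids = []
    parent = ""
    for step in steps:
        # Chain the parent ID into the hash so a change invalidates later layers.
        parent = hashlib.sha256((parent + "\n" + step).encode("utf-8")).hexdigest()
        ids.append(parent)
    return ids


def reusable_layers(old_steps, new_steps):
    """Count the leading layers of the new build that are identical to the old build."""
    count = 0
    for old_id, new_id in zip(layer_ids(old_steps), layer_ids(new_steps)):
        if old_id != new_id:
            break
        count += 1
    return count


# Hypothetical builds: only the last step (the application code) changed.
old = ["FROM base", "COPY deps.txt", "RUN install deps", "COPY app.js v1"]
new = ["FROM base", "COPY deps.txt", "RUN install deps", "COPY app.js v2"]
print(reusable_layers(old, new))  # 3
```

In this model, a change in an early step forces every later layer to be rebuilt, while a change in the last step leaves all earlier layers reusable.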

Some of the rules for writing a Dockerfile are as follows:

- Use # for comments.
- The FROM instruction tells Docker which image to use as the base.
- Instructions that begin with RUN are executed while the image is being built, for example to install a software package.
- The COPY instruction copies files into the image.
- WORKDIR specifies the working directory.
- CMD/ENTRYPOINT sets the container startup command.

Both RUN and CMD/ENTRYPOINT execute commands. The difference is that RUN is executed while the image is being built, whereas CMD/ENTRYPOINT is executed when a container is created from the image; it is similar to the difference between header files and ordinary code in C/C++. The latter can also be set only once per Dockerfile. CMD and ENTRYPOINT differ in more than how they are written: arguments added after the image name in docker run override CMD, whereas they are appended to ENTRYPOINT. It is generally advisable to use ENTRYPOINT.

The Dockerfile for a simple Node command-line script is as follows:

    FROM mhart/alpine-node:8.9.3
    LABEL maintainer="lizheming <>" \
          org.label-schema.name="Drone Wechat Notification" \
          org.label-schema.vendor="lizheming" \
          org.label-schema.schema-version="1.1.0"
    WORKDIR /wechat
    COPY package.json /wechat/package.json
    RUN npm install --production --registry=https://registry.npm.taobao.org
    COPY index.js /wechat/index.js
    ENTRYPOINT [ "node", "/wechat/index.js" ]

Here I consider the dependencies relatively stable, since they change less often than the code, so they are installed first. Putting the more stable steps in the earlier layers lets the layers be reused as much as possible across builds, without increasing the size of the image.

Finally, we can build the Docker image with the following command:

    $ docker build -t lizheming/drone-wechat:latest .

The image name follows the same format as in docker run. After the build is complete, you can happily push the image to Docker Hub.
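
Returning to the CMD/ENTRYPOINT distinction described above, the following Python sketch shows how the final startup command is assembled. It models only the exec (JSON array) form and is an illustration, not Docker's implementation; the function name and the `--debug` flag are hypothetical.

```python
def effective_command(entrypoint, cmd, run_args):
    """Compute the argv a container starts with (exec form only).

    entrypoint: ENTRYPOINT from the image, as a list (may be empty)
    cmd:        CMD from the image, as a list (may be empty)
    run_args:   arguments given after the image name in `docker run`
    """
    # Arguments passed to `docker run` replace CMD entirely...
    args = list(run_args) if run_args else list(cmd)
    # ...but are appended to ENTRYPOINT, which stays in place.
    return list(entrypoint) + args


# Image with ENTRYPOINT: extra arguments are appended.
print(effective_command(["node", "/wechat/index.js"], [], ["--debug"]))
# ['node', '/wechat/index.js', '--debug']

# Image with only CMD: extra arguments replace the command.
print(effective_command([], ["nginx", "-g", "daemon off;"], ["sh"]))
# ['sh']
```

This is why an ENTRYPOINT image behaves like a fixed executable that accepts arguments, while a CMD image only supplies a default that any `docker run` argument discards.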

Docker Compose

So far we have covered how to start a single service, but a complete project certainly depends on more than one service, and a Docker image can set only one ENTRYPOINT. Do we really have to run docker run by hand to create N container instances? Docker Compose came into being to solve this problem. It is a container orchestration program that uses a YAML configuration file to manage the containers you need to start, so you no longer have to execute docker run many times.

Docker Compose is written in Python and is very simple to install: just run pip install docker-compose. After installation, use the up and stop commands to start and stop the services, respectively. A simple docker-compose.yaml configuration file looks roughly like this:

    version: "2"
    services:
      nginx:
        depends_on:
          - "php"
        image: "nginx:latest"
        volumes:
          - "$PWD/src/docker/conf:/etc/nginx/conf.d"
          - "$PWD:/home/q/system/m_look_360_cn"
        ports:
          - "8082:80"
        container_name: "m.look.360.cn-nginx"
      php:
        image: "lizheming/php-fpm-yaf"
        volumes:
          - "$PWD:/home/q/system/m_look_360_cn"
        container_name: "m.look.360.cn-php"

Another advantage of Docker Compose is that it handles the dependencies between containers. Inside each container, service names are bound to container IPs through hosts entries, so a service name can be used directly to reach the corresponding container. For example, php:9000 in the project's Nginx configuration relies on this mechanism.
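
Before looking at that Nginx configuration, the dependency handling can be sketched as a small ordering problem: a service listed under depends_on has to be started before the service that depends on it. The Python below is an illustration of that idea using a topological sort, not Docker Compose's actual code; it assumes Python 3.9 or later for the standard-library `graphlib` module, and the `start_order` helper is hypothetical.

```python
from graphlib import TopologicalSorter


def start_order(depends_on):
    """Return service names ordered so that dependencies come first.

    depends_on maps each service to the list of services it depends on,
    mirroring the depends_on keys of a compose file.
    """
    return list(TopologicalSorter(depends_on).static_order())


# Mirrors the compose file above: nginx depends on php.
services = {"nginx": ["php"], "php": []}
print(start_order(services))  # ['php', 'nginx']
```

Note that start order is not the same as readiness: a dependency being started first does not by itself guarantee that it is already accepting connections.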

The Nginx configuration:

    server {
        listen 80;
        server_name dev.m.look.360.cn;
        charset utf-8;
        root /home/q/system/m_look_360_cn/public;
        index index.html index.htm index.php;

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        location ~ \.php$ {
            fastcgi_pass php:9000;
            #fastcgi_pass unix:/tmp/fcgi.sock;
            fastcgi_index index.php;
        }
    }

Docker related

Beyond solving the deployment environment problem described above, Docker's container virtualization brings some other advantages, such as:

- CI (continuous integration) and CD (continuous delivery) based on Docker
- Elastic scale-out and scale-in based on Kubernetes and Docker Swarm

CI/CD is very important for today's agile development: automated tasks save us a lot of unnecessary development time. For more information, please refer to my article "Clone Based on Docker CI Tools" [4]. The elastic scaling provided by k8s and Docker Swarm means the business no longer has to worry about traffic. With monitoring and alert thresholds in place, capacity is expanded automatically when traffic peaks, and excess containers are removed automatically during troughs to save costs, freeing the spare resources for other services.
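
As a rough illustration of the elastic scaling idea, the sketch below computes how many container replicas to run from the observed load. It is loosely modeled on the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)); the function name, the load numbers, and the replica limits are assumptions made for this example and do not describe any particular cluster setup.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to load, within fixed limits.

    current_load and target_load are per-replica averages in the same unit
    (for example, percent CPU). The limits are illustrative defaults.
    """
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp so a spike cannot scale without bound and a trough keeps one replica.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(2, 90, 60))   # 3  (peak: scale out)
print(desired_replicas(4, 15, 60))   # 1  (trough: scale in)
print(desired_replicas(2, 500, 50))  # 10 (capped at max_replicas)
```

The clamp reflects the cost concern mentioned above: scaling in during troughs frees resources, while the upper limit keeps a traffic spike from consuming everything.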
