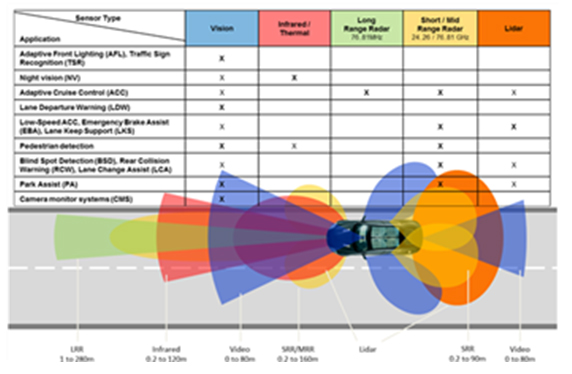

With the advent of Advanced Driver Assistance Systems (ADAS) and the push toward driverless vehicles, cars need a detailed understanding of their surroundings. A human driver can perceive the environment, make judgments, and react quickly in different situations. However, no one is perfect: we get tired and distracted, and we make mistakes. To improve safety, automakers are designing ADAS solutions for passenger cars. Cars rely on a variety of sensors to sense their complex surroundings in different situations. This data is then transmitted to high-precision processors such as the TI TDA2x and finally used for functions such as automatic emergency braking (AEB), lane departure warning (LDW), and blind spot monitoring.

Currently, the main sensors used for environment sensing fall into the following categories.

Passive sensors mainly sense rays reflected or emitted from an object:

- Visual image sensor: an imager that operates in the visible spectrum.
- Infrared image sensor: an imager that operates outside the visible spectrum, in either the near infrared or the thermal (far) infrared.

Passive sensors are affected by the environment, such as the time of day and the weather. For example, a visual sensor is affected by the amount of visible light available at different times of the day.

Active sensors emit a ray and measure the response of the reflected signal. Their advantage is that measurements can be obtained at any time, regardless of the time of day or the season.
- Radar: transmits radio waves and determines the distance, direction, and speed of an object from the radio waves it reflects.
- Ultrasonic: transmits ultrasonic waves and determines the distance of an object from the ultrasonic waves it reflects.
- Lidar: scans a laser across the scene and determines the distance of an object from the laser light it reflects.
- Time of flight: uses a camera to measure the time an infrared beam takes to travel to the object and back to the sensor, and determines the distance of the object from that time.
- Structured light: projects a known light pattern onto an object, for example through TI's Digital Light Processing (DLP) device. A camera then captures the deformed light pattern and analyzes it to determine the distance of the object.

To provide enhanced accuracy, reliability, and robustness in a variety of situations, it is often necessary to use more than one sensor to view the same scene. All sensor technologies have inherent limitations and advantages. Combining different sensor technologies and fusing their data for the same scene provides a more robust solution, because data fusion helps resolve views that are ambiguous to any single sensor.

A typical example is the combination of visible light sensors and radar. The benefits of visible light sensors include high resolution and the ability to identify and classify objects, which provides important information about the scene. However, their performance is affected by natural light intensity and by weather such as fog, rain, and snow. In addition, other factors such as overheating can degrade image quality by increasing noise. The precision image processing provided by TI's processors can alleviate some of these effects.

Radar, on the other hand, can see through rain and snow and can measure distance very quickly and efficiently. Doppler radar has the added advantage of being able to detect the motion of an object.
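As a rough illustration of how the active sensors listed above turn an echo into a distance, and how Doppler radar turns a frequency shift into a speed, here is a minimal Python sketch. The function names and the sample numbers (a 400 ns radar echo, a 10 ms ultrasonic echo, a 77 GHz carrier) are illustrative assumptions, not values from any TI device, and real sensors add calibration, filtering, and noise handling that is omitted here.

```python
# Illustrative sketch only: names and sample values are assumptions.
SPEED_OF_LIGHT_M_S = 299_792_458.0   # radio waves, laser light, infrared
SPEED_OF_SOUND_M_S = 343.0           # ultrasound in dry air at about 20 degrees C


def echo_distance_m(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a reflector from the echo's round-trip time.

    The wave travels to the object and back, so the one-way
    distance is half of speed * time.
    """
    return wave_speed_m_s * round_trip_s / 2.0


def doppler_radial_speed_m_s(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial speed of a target from the Doppler shift of a radar echo.

    Uses the usual approximation for speeds far below the speed of
    light: shift = 2 * v * carrier / c. A positive shift means the
    target is approaching.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Radar echo returning after 400 ns: roughly 60 m away.
    print(round(echo_distance_m(400e-9, SPEED_OF_LIGHT_M_S), 2))
    # Ultrasonic echo returning after 10 ms: roughly 1.7 m away.
    print(round(echo_distance_m(0.010, SPEED_OF_SOUND_M_S), 3))
    # 5.14 kHz shift on an assumed 77 GHz carrier: roughly 10 m/s closing speed.
    print(round(doppler_radial_speed_m_s(5140.0, 77e9), 2))
```

The same halved round-trip relation applies to radar, lidar, time-of-flight cameras, and ultrasonic sensors; only the wave speed changes, which is why ultrasonic sensors suit short parking distances while radar and lidar cover much longer ranges.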
Radar resolution is low, however, so objects cannot be easily identified from radar data alone. The fusion of data from visible light sensors and radar therefore provides a more robust solution in a variety of situations.

In addition, cost varies between sensors, which also affects the best choice for a particular application. For example, lidar provides very accurate distance measurement, but at a much higher cost than passive image sensors. As the technology continues to develop, however, costs will continue to fall, and cars will eventually be able to watch and listen in every direction with the help of a variety of sensors.

The TDA processor family is highly integrated and built on a programmable platform that meets the intensive processing needs of automotive ADAS. Data from the different sensors viewing a scene can be fed to the TDA2x and combined into a more complete picture of that scene, helping the system make quick and intelligent decisions. For example, in a dark scene a visual sensor may show a mailbox whose shape resembles a person. TI's processors can perform sophisticated pedestrian detection: at first, based on the proportions of the object, the system may identify it as a pedestrian at the roadside. However, data from a thermal sensor will show that the temperature of the object is too low for it to be a living thing, so it is probably not a pedestrian. Sensors with different operating characteristics can therefore provide a higher level of safety.

As for driverless vehicles, perhaps the ultimate goal is a completely autonomous car that eventually leads to a world without traffic accidents. TI is actively focusing on the development of sensor and processing technologies to help customers develop driverless vehicles.
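The mailbox example above can be sketched as a simple decision rule that cross-checks a camera detection against a thermal reading. This is only a minimal illustration of the idea, not TI's pedestrian detection algorithm: the class names, the `fuse_pedestrian` function, and both thresholds are hypothetical and chosen purely for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
MIN_CAMERA_CONFIDENCE = 0.6   # classifier score needed to consider a pedestrian
MIN_LIVING_TEMP_C = 20.0      # below this, the object is unlikely to be alive


@dataclass
class CameraDetection:
    label: str          # class proposed by the visual classifier
    confidence: float   # classifier score in the range 0..1


@dataclass
class ThermalReading:
    surface_temp_c: float   # apparent temperature of the same object


def fuse_pedestrian(camera: CameraDetection, thermal: ThermalReading) -> str:
    """Combine a camera detection with a thermal reading of the same object."""
    looks_like_person = (
        camera.label == "pedestrian"
        and camera.confidence >= MIN_CAMERA_CONFIDENCE
    )
    warm_enough = thermal.surface_temp_c >= MIN_LIVING_TEMP_C

    if looks_like_person and warm_enough:
        return "pedestrian"
    if looks_like_person:
        # Person-shaped but cold: more likely a mailbox or a post.
        return "probably_static_object"
    return "not_pedestrian"


if __name__ == "__main__":
    # Person-shaped mailbox on a cold night.
    print(fuse_pedestrian(CameraDetection("pedestrian", 0.8), ThermalReading(5.0)))
    # Actual pedestrian at the roadside.
    print(fuse_pedestrian(CameraDetection("pedestrian", 0.8), ThermalReading(30.0)))
```

A production system would weigh the two sensors probabilistically rather than with fixed cut-offs, but the sketch shows why a second sensor with different operating characteristics can veto a false positive from the first.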
After a series of technological breakthroughs and continuous development, the question facing driverless R&D is no longer whether driverless driving can be achieved, but when.